Deep Learning: A Profound Understanding of Machine Learning Techniques

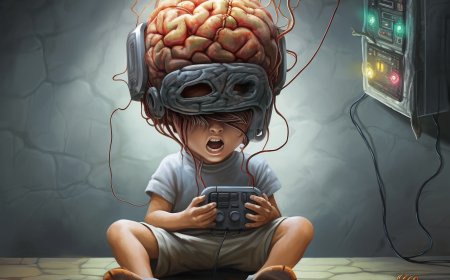

Deep learning, a subfield of machine learning, uses artificial neural networks, loosely inspired by the human brain, to analyze data. It has gained importance because of its ability to learn complex patterns from very large data sets. Deep learning techniques, such as convolutional neural networks and recurrent neural networks, have transformed fields such as image and speech recognition, natural language processing, and recommender systems. Understanding these techniques gives insight into their potential to drive innovation across a variety of industries, from healthcare to self-driving vehicles, making deep learning a central field in artificial intelligence.

Deep learning is a branch of machine learning aimed at representing and modeling intricate data and patterns. It refers to the use of artificial neural networks with many layers to extract knowledge from data. Deep learning underpins much of modern artificial intelligence and is considered one of the most significant technological achievements of the past decade.

Deep learning techniques represent data using multi-layered neural networks and analyze it in ways that often surpass traditional methods. Applications of deep learning include image recognition, speech processing, natural language processing, recommendation systems, and more. A solid understanding of deep learning techniques can reveal the vast potential they offer across various fields and industries.

Deep Learning Concept: Explanation and Meaning

Deep learning is a subfield of machine learning, which, in turn, is a branch of artificial intelligence. It's a term that has garnered significant attention and is often used to describe the core technology behind many cutting-edge applications, particularly in fields like image and speech recognition. Let's look at the details of deep learning to understand the concept and what it means.

1. Neural Networks: At the heart of deep learning lies neural networks. These are computational models inspired by the structure and function of the human brain. A neural network comprises layers of interconnected nodes, or neurons, each processing and passing information to the next layer. Deep learning models have multiple hidden layers, hence the term "deep."

2. Learning from Data: Deep learning systems are designed to learn from data, making them highly data-dependent. They typically require large amounts of data for training, often labeled, where the network adjusts its parameters iteratively to minimize the difference between its predictions and the true labels.

3. Feature Extraction: One key advantage of deep learning is its ability to automatically extract relevant features from the data. In traditional machine learning, feature engineering is a time-consuming task, but deep learning models can automatically learn the most important features during training.

4. Complex Representations: Deep learning models create complex hierarchical representations of data. Lower layers often capture simple features, like edges and corners in an image, while higher layers abstract more complex patterns, like recognizing faces or objects.

5. Versatile Applications: Deep learning has found applications in various domains, such as computer vision, natural language processing, speech recognition, and even playing board games like chess and Go. It has pushed the boundaries of what AI systems can achieve.

6. Computational Resources: Training deep learning models requires substantial computational resources, including powerful GPUs and TPUs. The depth and complexity of these networks demand extensive processing power.

7. Deep Learning Algorithms: Various algorithms are used in deep learning, including convolutional neural networks (CNNs) for image analysis, recurrent neural networks (RNNs) for sequential data, and generative adversarial networks (GANs) for creating new data.

8. Interpretability Challenges: Deep learning models are often criticized for their "black-box" nature. Understanding how and why they make specific decisions can be challenging, which is a drawback in fields where interpretability is crucial, like healthcare.

9. Constant Advancements: Deep learning is a dynamic field with ongoing advancements. Researchers are continually developing new architectures and techniques to improve performance, efficiency, and interpretability.

10. Limitations: While deep learning has achieved remarkable success in many areas, it's not a panacea. It can be data-hungry, and its performance may deteriorate when applied to tasks with limited data. It also lacks the common-sense reasoning abilities of humans.

In summary, deep learning is a subset of machine learning that utilizes neural networks with multiple layers to automatically learn and extract features from data. It has demonstrated remarkable success in a wide range of applications but requires significant computational resources and poses challenges in terms of interpretability. Deep learning continues to be an active and evolving field, pushing the boundaries of what AI systems can achieve.

The History of Deep Learning: Evolution and Previous Applications

The history of deep learning is a long and evolving narrative that traces the development of neural networks and their applications over the years. To truly understand this history, one must delve into the intricate details of its evolution and previous applications.

1. Early Beginnings:

Deep learning has its roots in artificial neural networks, which were initially conceived as early as the 1940s. However, due to limited computational resources and data availability, progress in this field was slow.

2. The AI Winter:

In the 1970s and 1980s, the field of artificial intelligence went through periods known as "AI winters," characterized by reduced funding and interest in AI research. During these periods, neural networks lost ground to rule-based expert systems.

3. Emergence of Backpropagation:

A significant development in the 1980s was the popularization of the backpropagation algorithm, notably through the 1986 work of Rumelhart, Hinton, and Williams, which allowed for more efficient training of multi-layer neural networks. This laid the groundwork for deeper and more complex networks.

4. Convolutional Neural Networks (CNNs):

In the late 1980s, CNNs were introduced, designed specifically for image processing tasks. These networks use convolutional layers to automatically learn features from image data, making them a breakthrough in computer vision.

5. Recurrent Neural Networks (RNNs):

RNNs, which took shape in the 1980s and early 1990s, were designed for sequential data, such as text and speech. They allow networks to maintain a memory of previous inputs, enabling tasks like language modeling.

6. The Vanishing Gradient Problem:

One significant challenge in deep learning was the vanishing gradient problem, in which gradients shrink as they are propagated back through many layers, hindering the training of deep networks. This problem was partially addressed in the 1990s, for example by the Long Short-Term Memory (LSTM) architecture introduced in 1997.

7. Rise of Big Data:

In the 2000s, the proliferation of the internet and digital technologies led to an explosion in data availability, providing the necessary resources for training deep networks. This data deluge played a pivotal role in the resurgence of deep learning.

8. Breakthrough in Image and Speech Recognition:

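In the 2010s, deep learning models, particularly CNNs and RNNs, achieved remarkable success in image and speech recognition tasks, surpassing traditional machine learning methods. A frequently cited milestone is the 2012 ImageNet competition, which a deep CNN (AlexNet) won by a wide margin.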

9. Deep Learning in Gaming:

DeepMind's AlphaGo, which combined deep neural networks with reinforcement learning and tree search, made headlines in 2016 when it defeated Lee Sedol, one of the world's top professional Go players. This event showcased the capabilities of deep learning in strategic decision-making.

10. Autonomous Vehicles and Robotics:

Deep learning found applications in autonomous vehicles, enabling them to navigate and make real-time decisions based on sensor data. It also enhanced robotic systems for various tasks.

11. Healthcare and Drug Discovery:

Deep learning has been used in medical image analysis, disease diagnosis, and drug discovery, aiding in early detection and treatment.

12. Natural Language Processing (NLP):

The advent of transformer models, like BERT and GPT-3, revolutionized NLP tasks, including language translation, sentiment analysis, and chatbots.

13. Ethical and Privacy Concerns:

The increasing use of deep learning has raised ethical concerns related to privacy, bias, and decision interpretability. It has prompted discussions on responsible AI deployment.

14. Future Prospects:

Deep learning continues to evolve, with ongoing research aimed at addressing its limitations, improving interpretability, and extending its applications to new domains.

In summary, the history of deep learning is a story of persistence, innovation, and technological advancements. It has seen significant developments, setbacks, and resurgences over the years, ultimately leading to its widespread adoption and transformative impact across various industries. Understanding this history is essential to appreciate the full scope and potential of deep learning in the present and the future.

Machine Learning Architecture: Understanding Layers and Neural Networks

Machine learning architecture, particularly in the context of deep learning, relies on a complex framework of layers and neural networks. Understanding the intricacies of these architectural elements is essential for grasping the functioning of deep learning models.

1. Neurons and Activation Functions:

At the core of neural networks are artificial neurons, which mimic the functioning of biological neurons. Each neuron processes input data and applies an activation function to determine its output. Common activation functions include the sigmoid, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent) functions.
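As a rough illustration of the three activation functions named above, here is a minimal sketch in plain Python. The `neuron` helper and its example weights are made up for illustration; real frameworks provide vectorized and numerically safer versions of these functions.

```python
import math


def sigmoid(z: float) -> float:
    """Squash z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Pass positive values through unchanged and clamp negatives to 0."""
    return max(0.0, z)


def tanh(z: float) -> float:
    """Squash z into the range (-1, 1)."""
    return math.tanh(z)


def neuron(inputs, weights, bias, activation=relu):
    """Hypothetical single neuron: weighted sum plus bias, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


# Compare the three functions on a few sample pre-activation values.
for z in (-2.0, 0.0, 2.0):
    print(z, sigmoid(z), relu(z), tanh(z))
```

The neuron's output depends entirely on which activation is plugged in: the same weighted sum can be clipped at zero (ReLU) or squeezed into a bounded range (sigmoid, tanh).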

2. Layers in Neural Networks:

Neural networks are composed of multiple layers, each with a specific role. The most fundamental layers include:

- Input Layer: This layer receives the initial data input and passes it on to the subsequent layers.

- Hidden Layers: These intermediate layers process and transform the data. The number of hidden layers and the neurons within them vary depending on the network's architecture.

- Output Layer: The final layer produces the network's prediction or output based on the processed data.

3. Feedforward Propagation:

The process of data flowing from the input layer through the hidden layers to the output layer is called feedforward propagation. During this process, each neuron in a layer receives the weighted input from the previous layer, applies an activation function, and passes the result to the next layer.
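To make the flow of data concrete, the following sketch pushes one input through a tiny network. The 2-3-1 layer sizes and the hand-picked weights are assumptions chosen only so the arithmetic is easy to follow; a trained network would learn its own values.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases, activation):
    """One fully connected layer; weights[j] holds the weights of neuron j."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Feed x through each (weights, biases, activation) layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical network: 2 inputs -> 3 hidden neurons (ReLU) -> 1 output (sigmoid).
layers = [
    ([[0.5, -0.5], [1.0, 1.0], [-1.0, 0.5]], [0.0, -1.0, 0.0], relu),
    ([[1.0, 1.0, 1.0]], [0.0], sigmoid),
]

output = forward([1.0, 2.0], layers)
print(output)
```

Each layer only ever sees the outputs of the layer before it, which is what distinguishes a feedforward pass from the looping connections used in recurrent networks.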

4. Weights and Biases:

Neural networks learn from data by adjusting the weights and biases associated with each neuron. These parameters determine the strength and direction of connections between neurons. The process of adjusting these parameters to minimize prediction errors is known as training.

5. Backpropagation:

Backpropagation is a critical algorithm for training neural networks. It calculates the gradients of the loss function with respect to the model's weights and biases. These gradients are then used to update the model's parameters through techniques like gradient descent.
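The idea can be sketched on the smallest possible case: a single sigmoid neuron trained with gradient descent. This is an illustrative assumption rather than a full multi-layer implementation; the toy data (logical OR), the learning rate, and the epoch count are all made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(data, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron by gradient descent on cross-entropy loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for x, y in data:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)  # forward pass
            dz = p - y  # gradient of the loss w.r.t. the pre-activation
            grad_w[0] += dz * x[0]  # chain rule: dz/dw_i = x_i
            grad_w[1] += dz * x[1]
            grad_b += dz
        # Gradient descent update using the average gradient over the data.
        w = [w[i] - lr * grad_w[i] / n for i in range(2)]
        b -= lr * grad_b / n
    return w, b


# Toy data set: the logical OR of two binary inputs.
data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]
w, b = train(data)
predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in data]
print(w, b, predictions)
```

In a deep network the same chain-rule step is repeated layer by layer, from the output back toward the input, which is where the name backpropagation comes from.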

6. Deep Learning Architectures:

Deep learning often involves models with many hidden layers, which is why they are referred to as deep neural networks. Common deep learning architectures include Convolutional Neural Networks (CNNs) for image analysis and Recurrent Neural Networks (RNNs) for sequential data processing.

7. Convolutional Neural Networks (CNNs):

CNNs are designed for tasks like image recognition. They employ convolutional layers to automatically detect features in images, such as edges and textures.

8. Recurrent Neural Networks (RNNs):

RNNs are specialized for sequential data, making them suitable for tasks like natural language processing and speech recognition. They maintain a hidden state that carries information about previous inputs.

9. Long Short-Term Memory (LSTM):

An extension of RNNs, LSTMs are designed to address the vanishing gradient problem and are especially useful for tasks that require learning long-term dependencies in data.

10. Architectural Variations:

Beyond CNNs and RNNs, there are various architectural variations, including autoencoders for unsupervised learning and transformers for natural language processing tasks.

11. Hyperparameter Tuning:

Setting the hyperparameters, such as learning rate, batch size, and the number of neurons in each layer, is crucial for optimizing the performance of a neural network. Hyperparameter tuning involves finding the right combination of settings that lead to the best results.
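One simple way to search for a good combination is a grid search, sketched below. The `final_loss` function is a toy stand-in for "train a model and measure its validation loss", and the candidate values are arbitrary assumptions; in practice each grid point would involve a full training run.

```python
from itertools import product


def final_loss(learning_rate, steps):
    """Toy training run: minimize f(w) = (w - 3)^2 by gradient descent from w = 0."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * 2.0 * (w - 3.0)  # gradient of (w - 3)^2 is 2(w - 3)
    return (w - 3.0) ** 2


def grid_search(learning_rates, step_counts):
    """Evaluate every combination and return the best one with all results."""
    results = {
        (lr, steps): final_loss(lr, steps)
        for lr, steps in product(learning_rates, step_counts)
    }
    best = min(results, key=results.get)
    return best, results


best, results = grid_search([0.001, 0.1, 1.1], [10, 50])
for setting, loss in sorted(results.items()):
    print(setting, loss)
print("best:", best)
```

The three learning rates show the usual pattern: too small and training barely moves, too large and it diverges, with a workable value somewhere in between.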

In summary, machine learning architecture is a multi-layered framework with various components, including neurons, layers, and activation functions. The architecture's complexity and its ability to process and learn from data make it a powerful tool in solving a wide range of tasks. Understanding the layers and neural networks within this architecture is essential for designing and training effective deep learning models.

Deep Learning Techniques: CNNs, RNNs, and Deep Neural Networks

Deep learning techniques encompass a range of neural network architectures that have proven to be highly effective in various domains. This detailed explanation will delve into Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Deep Neural Networks (DNNs) to provide a comprehensive understanding of these key components of deep learning.

1. Convolutional Neural Networks (CNNs):

CNNs are a pivotal deep learning technique, primarily employed for tasks involving image processing and recognition. Their architecture is tailored to handle grid-like data, making them ideal for tasks such as image classification, object detection, and facial recognition.

- Convolutional Layers: CNNs are composed of multiple convolutional layers. These layers employ filters to detect patterns and features within an image. These patterns can be as simple as edges or as complex as specific object parts.

- Pooling Layers: After convolutional layers, pooling layers are used to reduce the spatial dimensions of the data. Common pooling techniques include max-pooling, which selects the maximum value in a region, and average pooling, which computes the average value.

- Fully Connected Layers: The final layers of a CNN are typically fully connected layers. They process the extracted features to make predictions. For instance, in image classification, these layers map the learned features to the corresponding classes.
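The convolution and max-pooling steps described above can be sketched in a few lines of plain Python. The 5x5 image and the hand-written edge filter are assumptions for illustration; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, as used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [
        [
            max(
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


# 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical vertical-edge filter.
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)     # 2x2 map after pooling
print(features)
print(pooled)
```

The feature map responds only where the brightness changes, and pooling keeps that response while halving each spatial dimension.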

2. Recurrent Neural Networks (RNNs):

RNNs are specialized for sequential data and are commonly used in natural language processing, speech recognition, and time series analysis. Unlike feedforward neural networks, RNNs have connections that loop back on themselves, allowing them to maintain a memory of previous inputs.

- Hidden States: RNNs maintain hidden states that capture information about previous inputs in the sequence. This enables them to consider context and dependencies in sequential data.

- Vanishing Gradient Problem: RNNs can suffer from the vanishing gradient problem, where gradients become too small during training, making long-term dependencies hard to learn. This has led to the development of variations like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) to mitigate this issue.
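A scalar version of the recurrence makes the hidden state easy to see. The sketch below assumes a simple Elman-style update, h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), with made-up weights; real RNNs use weight matrices and vector-valued states.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: combine the new input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Run the recurrence over a sequence and return every hidden state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# A single "event" at the first time step, followed by silence.
states = run_rnn([1.0, 0.0, 0.0, 0.0])
print(states)
```

The first input leaves a trace in every later state, but the trace shrinks at each step. That fading is a small-scale hint at why long-range dependencies are hard for plain RNNs and why gated variants such as LSTM and GRU were developed.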

3. Deep Neural Networks (DNNs):

DNNs, used here to mean deep feedforward networks (also called multilayer perceptrons), represent the foundation of deep learning. These networks consist of an input layer, multiple hidden layers, and an output layer.

- Feedforward Propagation: DNNs process data in a forward direction, from the input layer to the output layer. Each layer applies a set of weighted connections and activation functions to transform the input data.

- Backpropagation: Training DNNs involves backpropagation, where the network's predictions are compared to the actual target values, and the gradients of the loss function are computed. These gradients are then used to update the network's parameters, typically via gradient descent algorithms.

- Deep Architectures: DNNs can be deep, with several hidden layers. The depth of the network enables it to learn hierarchical features, making it suitable for complex tasks like natural language processing and speech recognition.

In summary, deep learning techniques encompass CNNs, RNNs, and DNNs, each tailored for specific tasks. CNNs excel in image-related tasks, RNNs are designed for sequential data analysis, and DNNs serve as the foundation of deep learning with their deep architectures. Understanding these techniques is crucial for harnessing the power of deep learning in various applications across fields such as computer vision, natural language processing, and data analysis.

Applications of Deep Learning in Image and Audio Processing

Deep learning has revolutionized the fields of image and audio processing with its ability to extract intricate patterns and features from data. This discussion will provide an in-depth exploration of the applications of deep learning in these domains.

Image Processing with Deep Learning:

Deep learning techniques have become indispensable in image processing due to their unparalleled ability to understand and manipulate visual data. Some notable applications include:

1. Image Classification: Deep Convolutional Neural Networks (CNNs) are extensively employed for image classification tasks. They can accurately categorize images into predefined classes, making them crucial for applications like object recognition and content-based image retrieval.

2. Object Detection: CNNs equipped with object detection algorithms can identify and locate objects within an image. This technology is widely used in autonomous vehicles, surveillance systems, and robotics.

3. Facial Recognition: Deep learning models have advanced facial recognition technology, enabling security systems, social media platforms, and mobile devices to recognize and authenticate users based on their facial features.

4. Image Generation: Generative Adversarial Networks (GANs) are used to create realistic images, making them valuable for artistic expression, entertainment, and even data augmentation for training datasets.

5. Medical Imaging: Deep learning aids in the analysis of medical images such as X-rays, MRIs, and CT scans. It can assist in diagnosing diseases, identifying abnormalities, and planning medical treatments.

Audio Processing with Deep Learning:

Deep learning has also made significant contributions to audio processing, enabling the development of applications that were previously challenging to achieve:

1. Speech Recognition: Recurrent Neural Networks (RNNs) and their variants, such as Long Short-Term Memory (LSTM), have greatly improved automatic speech recognition (ASR) systems. These systems can transcribe spoken language into text, powering voice assistants like Siri and Google Assistant.

2. Music Generation: Deep learning models like recurrent neural networks (RNNs) and Transformers can generate music, imitating the styles of famous composers or creating entirely new compositions. This is used in the entertainment industry and music production.

3. Sound Classification: Deep learning is used to classify and analyze sounds. For example, it can distinguish between different types of environmental noises for noise pollution monitoring or identify specific instruments in musical recordings.

4. Sentiment Analysis in Voice: Deep learning models can analyze the sentiment and emotional tone in spoken language. This technology is valuable in customer service for assessing customer satisfaction or in mental health applications for monitoring emotional states.

5. Noise Reduction: Deep learning models can enhance the quality of audio signals by removing unwanted noise, making them useful in applications like telecommunication and audio restoration.

In summary, deep learning has had a profound impact on image and audio processing. In image processing, CNNs have revolutionized image classification, object detection, facial recognition, and more. In audio processing, RNNs and other deep learning models have significantly improved speech recognition, music generation, sound classification, sentiment analysis, and noise reduction. These applications are just a glimpse of the vast potential of deep learning in enhancing visual and auditory data analysis.

Using Deep Learning in Machine Translation and Natural Language Processing

Deep learning has made significant contributions to machine translation and natural language processing (NLP) by enabling more accurate and context-aware language understanding and generation. In this comprehensive exploration, we will delve into the applications of deep learning in these domains.

Machine Translation with Deep Learning:

Machine translation is the process of automatically translating text or speech from one language to another. Deep learning, especially the use of neural machine translation (NMT), has transformed the quality of automated translation. Here's a detailed look at the key components and applications:

- Sequence-to-Sequence Models: Deep learning models, such as Recurrent Neural Networks (RNNs) and Transformer models, have revolutionized machine translation. These sequence-to-sequence models can handle variable-length input sequences and produce output sequences, making them highly adaptable for translation tasks.

- Attention Mechanisms: Attention mechanisms, particularly the self-attention mechanism in Transformers, have significantly improved translation accuracy. They allow the model to focus on relevant parts of the source text when generating the target text, resulting in more contextually accurate translations.

- Parallel Corpora: Deep learning models for machine translation require large parallel corpora, which are collections of texts in two or more languages. These corpora are used for training and fine-tuning translation models to capture language nuances and idiomatic expressions.

- Multilingual Translation: Deep learning models can be trained to perform multilingual translation, enabling translation between multiple languages. This is invaluable for cross-lingual communication and global businesses.

- Real-time Translation: Mobile applications and online platforms leverage deep learning for real-time translation. Users can speak or type in one language, and the application provides instant translations in their preferred language.
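The attention mechanism mentioned in the list above can be illustrated with scaled dot-product attention for a single query. This is a minimal sketch under simplifying assumptions: the key and value vectors are invented, and the learned projection matrices and multiple heads of a real Transformer are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys
    ]
    weights = softmax(scores)
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return output, weights


# Hypothetical source positions: each has a key (for matching) and a value (content).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

output, weights = attention([4.0, 0.0], keys, values)
print(weights)
print(output)
```

Source positions whose keys align with the query receive most of the weight, so the output is dominated by their values. This is the sense in which the model "focuses" on relevant parts of the source text.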

Natural Language Processing (NLP) with Deep Learning:

NLP is the field that focuses on the interaction between humans and computers through natural language. Deep learning has brought remarkable progress to NLP, offering solutions to various tasks and challenges. Here are some of the key aspects:

- Named Entity Recognition (NER): Deep learning models can identify and classify named entities in text, such as names of people, organizations, and locations. This is vital for information retrieval and knowledge extraction.

- Sentiment Analysis: Deep learning techniques are used to analyze and classify sentiment in text data, which is essential for understanding customer opinions, social media trends, and product reviews.

- Question Answering: Deep learning models are employed for question-answering systems that can extract answers from a large corpus of text, such as encyclopedias or websites.

- Chatbots and Virtual Assistants: Many chatbots and virtual assistants, such as those used in customer support or on websites, are powered by deep learning models. These models can understand and generate human-like responses.

- Language Understanding and Generation: Deep learning is used for tasks like language understanding and generation, including text summarization, language translation, and content generation for marketing or news articles.

- Topic Modeling: Deep learning models can discover underlying topics within a large collection of text data, aiding in content recommendation and organization.

- Language Models: Pre-trained deep learning language models, such as GPT-3, are capable of understanding and generating human-like text, which has applications in a wide range of NLP tasks.

In summary, deep learning has significantly improved machine translation and natural language processing by enabling context-aware language understanding and generation. It has revolutionized translation with sequence-to-sequence models and attention mechanisms, making real-time and multilingual translation possible. In NLP, deep learning enhances tasks such as named entity recognition, sentiment analysis, question answering, chatbots, language understanding and generation, topic modeling, and the development of advanced language models. The continued integration of deep learning in these fields promises further advancements in automated language processing and communication.

Complex Challenges and Problems in Deep Learning

Deep learning, while a powerful and versatile technology, is not without its share of complex challenges and problems. In this exhaustive discussion, we will delve into some of the key difficulties and issues that researchers and practitioners encounter in the field of deep learning.

1. Data Availability and Quality: One of the fundamental challenges in deep learning is the availability and quality of data. Deep learning models require large volumes of labeled data for training. Obtaining such data can be a laborious and expensive process, especially in domains where expert annotation is necessary. Moreover, data quality is critical, as noisy or biased data can lead to suboptimal model performance.

2. Overfitting: Overfitting is a common problem in deep learning, where a model becomes too specialized in learning the training data and performs poorly on new, unseen data. Addressing overfitting requires techniques like regularization and early stopping. Balancing model complexity and data size is a delicate trade-off.

3. Model Complexity: Deep learning models, particularly deep neural networks, can be extremely complex with millions or even billions of parameters. This complexity can make training and fine-tuning challenging, demanding substantial computational resources.

4. Computational Resources: The computational demands of deep learning are substantial. Training deep neural networks often requires powerful hardware, such as Graphics Processing Units (GPUs) or even specialized hardware like TPUs (Tensor Processing Units). Scaling up deep learning models for large-scale applications can be costly.

5. Interpretability: Deep learning models are often considered as "black boxes." They can make highly accurate predictions, but understanding why a model made a particular decision can be challenging. Interpretable deep learning models are an active area of research.

6. Ethical Concerns: Ethical concerns surrounding deep learning include bias in AI systems, privacy issues, and potential misuse of AI technology. Addressing these concerns and ensuring fairness and accountability in AI systems is a priority.

7. Generalization: Achieving strong generalization, where a model performs well on unseen data, is a persistent challenge. Researchers continually seek methods to improve model generalization.

8. Adversarial Attacks: Deep learning models can be vulnerable to adversarial attacks, where small, carefully crafted perturbations to input data can lead to incorrect predictions. Developing robust models against adversarial attacks is an ongoing challenge.

9. Long Training Times: Training deep learning models can be time-consuming, sometimes taking days or even weeks. This lengthy training time can hinder rapid experimentation and model development.

10. Limited Data Efficiency: Deep learning models often require massive amounts of data to perform well. Improving the data efficiency of these models is a critical challenge, especially in domains where data collection is limited.
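Early stopping, mentioned under overfitting in the list above, is simple enough to sketch directly. The validation losses below are invented numbers, and the `patience` parameter name is an assumption borrowed from common usage; a real training loop would compute the loss after each epoch and save the best weights.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (best_epoch, best_loss), stopping once the validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs: stop training
    return best_epoch, best_loss


# Hypothetical validation losses: improving at first, then rising (overfitting).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.66]
best_epoch, best_loss = early_stopping_epoch(val_losses)
print(best_epoch, best_loss)
```

Training would halt after the third epoch without improvement, and the model from the best epoch would be kept, on the assumption that later epochs are mostly memorizing the training data.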

In summary, deep learning faces a wide range of challenges, from data availability and overfitting to model complexity, interpretability, ethical concerns, and adversarial attacks. Researchers and practitioners continue to work on addressing these challenges, as the field of deep learning evolves and plays a crucial role in various domains, including computer vision, natural language processing, and reinforcement learning.

The Impact of Deep Learning on Artificial Intelligence and Technology

The impact of deep learning on artificial intelligence and technology has been substantial and transformative, with far-reaching implications across various domains. In this thorough exploration, we will dissect the multifaceted influence that deep learning has had on the field of AI and its broader technological applications.

1. Advancements in AI Performance:

Deep learning has significantly advanced the field of artificial intelligence by pushing the boundaries of what AI systems can achieve. Deep neural networks, with their ability to learn complex patterns and representations from data, have enabled breakthroughs in tasks such as image recognition, natural language understanding, and speech recognition. This has led to more capable and human-like AI applications.

2. Computer Vision and Image Processing:

One of the most evident impacts of deep learning is in computer vision. Convolutional Neural Networks (CNNs), a class of deep neural networks, have revolutionized image recognition and object detection. This technology is used in applications like facial recognition, autonomous vehicles, medical image analysis, and more.

3. Natural Language Processing:

In the realm of natural language processing, deep learning models, particularly Recurrent Neural Networks (RNNs) and Transformer models, have dramatically improved language understanding and generation. This has resulted in the development of chatbots, language translation tools, sentiment analysis, and text summarization systems.

4. Improved Decision-Making:

Deep learning has empowered AI systems to make more informed decisions based on data. This has applications in recommendation systems, personalized marketing, and even in autonomous decision-making in self-driving cars and industrial automation.

5. Healthcare and Biomedical Applications:

Deep learning has made significant inroads into the healthcare sector, enabling the development of diagnostic tools, disease detection models, and drug discovery applications. Deep learning models can analyze medical images, identify anomalies, and aid healthcare professionals in making more accurate diagnoses.

6. Financial Services:

In finance, deep learning is used for fraud detection, algorithmic trading, and risk assessment. These models can analyze vast amounts of financial data, detect irregular patterns, and make real-time decisions to mitigate risks.

7. Speech Recognition and Synthesis:

Voice assistants like Siri and Google Assistant rely on deep learning models for speech recognition and synthesis. These applications have improved voice-based interactions and accessibility features.

8. Autonomous Systems:

Deep learning plays a critical role in enabling autonomous systems, such as self-driving cars and drones. These technologies use deep neural networks to perceive and interpret their surroundings, making real-time decisions for navigation and safety.

9. Personalization and Recommendations:

E-commerce, streaming services, and social media platforms utilize deep learning to provide personalized recommendations to users. These recommendation systems analyze user behavior and preferences to offer tailored content.

10. Scientific Research:

Deep learning has accelerated scientific research by assisting in data analysis, simulations, and pattern recognition in various scientific domains, from astrophysics to genomics.

In summary, the impact of deep learning on artificial intelligence and technology is pervasive and transformative. It has revolutionized computer vision, natural language processing, healthcare, finance, autonomous systems, and many other domains. As deep learning continues to mature, its applications are likely to expand further, reshaping the technological landscape and enhancing AI's capabilities across industries.

Future Research Directions and Applications in Deep Learning

Research directions and applications in deep learning continue to evolve as the technology matures and is integrated into more domains. Deep learning, a subset of machine learning, uses neural networks with multiple hidden layers to model and process data. The areas below outline the main prospects and the challenges that come with them.

1. Explainable AI (XAI):

One key direction in deep learning is model interpretability. Because deep neural networks are often regarded as black boxes, understanding how they arrive at their predictions is critical, especially in fields such as healthcare, finance, and autonomous driving. Future research aims to develop more transparent and interpretable deep learning models, allowing users to understand and trust their decision-making processes.
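One simple way to probe a black-box model is occlusion: replace one input feature at a time with a baseline value and record how much the output changes. The sketch below is only an illustration of that idea. The names `occlusion_attribution` and `toy_model` are hypothetical, and the toy linear scorer stands in for a trained network.

```python
from typing import Callable, List, Sequence


def occlusion_attribution(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: float = 0.0,
) -> List[float]:
    """Score each feature by the output change when it is replaced by a baseline."""
    reference = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline  # hide feature i from the model
        scores.append(reference - model(occluded))
    return scores


def toy_model(x: Sequence[float]) -> float:
    """Hypothetical stand-in for a trained network: a fixed linear scorer."""
    weights = [2.0, -1.0, 0.0]
    return sum(w * v for w, v in zip(weights, x))


scores = occlusion_attribution(toy_model, [1.0, 3.0, 5.0])
print(scores)
```

A large positive or negative score means the feature pushed the prediction strongly in that direction, while a score of zero means the model ignored it. Real interpretability methods are more elaborate, but many follow this perturb-and-compare pattern.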

2. Self-Supervised Learning:

Self-supervised learning methods have gained prominence in recent years, mainly because they can learn from unlabeled data efficiently. This direction in deep learning involves exploring more sophisticated techniques for creating and training self-supervised models, which can reduce the dependence on labeled data and pave the way for applications in areas where data annotation is expensive or impractical.
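To make the idea of learning from unlabeled data concrete, the sketch below builds a training example from raw text by hiding one token and using the hidden token as the label. This is a minimal illustration of a masked-prediction pretext task, not a full training pipeline. The function name `make_masked_example` and the `[MASK]` placeholder are assumptions chosen for the example.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"  # placeholder token, chosen here for illustration


def make_masked_example(
    tokens: List[str], rng: random.Random
) -> Tuple[List[str], int, str]:
    """Hide one token; the hidden token becomes the training target."""
    position = rng.randrange(len(tokens))
    masked = list(tokens)
    target = masked[position]
    masked[position] = MASK
    return masked, position, target


sentence = ["deep", "learning", "models", "learn", "from", "data"]
masked, position, target = make_masked_example(sentence, random.Random(0))
print(masked, "->", target)
```

No human annotation is needed: the label comes from the data itself, which is why this family of methods scales to large unlabeled corpora.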

3. Reinforcement Learning (RL):

Deep reinforcement learning has shown remarkable success in various fields, such as robotics and gaming. Future research will likely focus on making RL algorithms more sample-efficient, robust, and safe, addressing challenges like exploration-exploitation trade-offs and catastrophic forgetting.
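The exploration-exploitation trade-off mentioned above can be illustrated with an epsilon-greedy strategy on a multi-armed bandit, a deliberately simple setting with no neural network involved. The sketch assumes Bernoulli rewards, and the function names (`select_action`, `update_estimate`, `run_bandit`) are hypothetical.

```python
import random
from typing import List, Tuple


def select_action(estimates: List[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore a random arm, otherwise exploit the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))
    return max(range(len(estimates)), key=lambda a: estimates[a])


def update_estimate(
    estimates: List[float], counts: List[int], action: int, reward: float
) -> None:
    """Incrementally update the running mean reward of the chosen arm."""
    counts[action] += 1
    estimates[action] += (reward - estimates[action]) / counts[action]


def run_bandit(
    true_means: List[float], steps: int = 2000, epsilon: float = 0.1, seed: int = 0
) -> Tuple[List[float], List[int]]:
    """Simulate epsilon-greedy on arms that pay 1.0 with the given probabilities."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_means)
    counts = [0] * len(true_means)
    for _ in range(steps):
        action = select_action(estimates, epsilon, rng)
        reward = 1.0 if rng.random() < true_means[action] else 0.0
        update_estimate(estimates, counts, action, reward)
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.8])
print(estimates, counts)
```

A larger `epsilon` spends more steps exploring, which improves the value estimates but gives up reward in the short term. Sample-efficient RL research looks for smarter ways to strike this balance.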

4. Multimodal Learning:

Deep learning is increasingly used in tasks that involve multiple data modalities, such as text, images, and audio. Future work will involve enhancing the capacity of models to effectively learn and integrate information from various modalities, leading to applications in multimedia content analysis, healthcare diagnostics, and more.

5. Transfer Learning and Pre-trained Models:

The use of pre-trained models, such as BERT and GPT-3, has become a standard practice in natural language processing. Future research may revolve around developing more efficient transfer learning techniques and domain adaptation methods to make these models more applicable to diverse domains and tasks.

6. Hardware Acceleration:

Deep learning models, particularly deep neural networks, demand substantial computational resources. Future research will continue to focus on hardware accelerators, such as GPUs, TPUs, and other specialized AI chips, to make deep learning more accessible and efficient.

7. Edge and IoT Applications:

The deployment of deep learning models on edge devices, including Internet of Things (IoT) devices, is an emerging area of research. Optimizing deep learning models for resource-constrained environments and ensuring real-time processing capabilities will be essential for applications like smart cities and autonomous systems.
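One common way to fit a model into a resource-constrained device is to store its weights as 8-bit integers instead of 32-bit floats. The sketch below shows symmetric per-tensor quantization in plain Python. It is a simplified illustration under that assumption, not the scheme used by any particular framework, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, restored)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Whether that error is acceptable depends on the model and the task.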

8. Natural Language Understanding:

Improving natural language understanding, generation, and dialogue systems is a vital research direction. This involves developing models that can grasp nuances in human language, engage in meaningful conversations, and accurately generate text in various languages.

9. Healthcare and Biomedical Applications:

Deep learning holds immense potential in revolutionizing healthcare, from disease diagnosis to drug discovery. Future research will focus on building models that can analyze medical images, predict patient outcomes, and aid in personalized treatment plans.

10. Environmental Monitoring and Climate Prediction:

Deep learning can also be applied to environmental sciences for tasks like climate modeling, disaster prediction, and ecological monitoring. Future research will likely aim to enhance the accuracy and scalability of models in these applications.

Overall, the future of deep learning research and applications is a dynamic landscape, with prospects for more robust and interpretable models, wider applicability across domains, and more efficient use of resources. As the field continues to advance, interdisciplinary collaboration and ethical considerations will remain crucial in harnessing the full potential of deep learning.

In conclusion

Deep learning represents a significant breakthrough in technology and artificial intelligence. It lets us understand and use data in deeper and more precise ways than before. Deep learning techniques have made it more achievable to improve performance in virtual reality applications, image recognition, natural language processing, recommendation systems, and many other fields. A thorough understanding of these techniques can help in developing new solutions and enhancing performance across a wide range of applications and industries. Deep learning is a key to the future of technology and artificial intelligence, and the opportunities ahead appear promising.
